Machine Learning & Data Science/Analytics & NeuroAI

Welcome to my world! I’m Aya. 🌸

Rooted in a deep love for BCI, I’ve been growing in the space of Multimodal AI and NeuroAI —

from building embedded AI systems in robotics, to exploring connections between behaviors and neural activity through computational neuroscience.

Real-Time ML

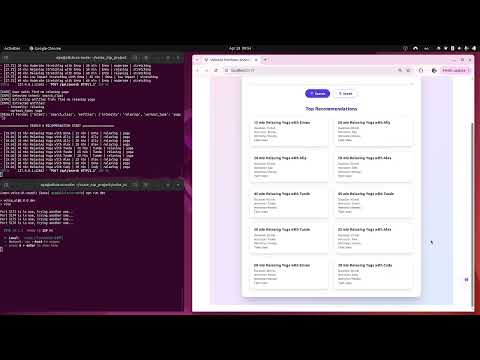

recommender system

- low latency, high accuracy

- built end-to-end, from scratch

- fine-tuned a few models, incorporating recommendations for first-time and existing users

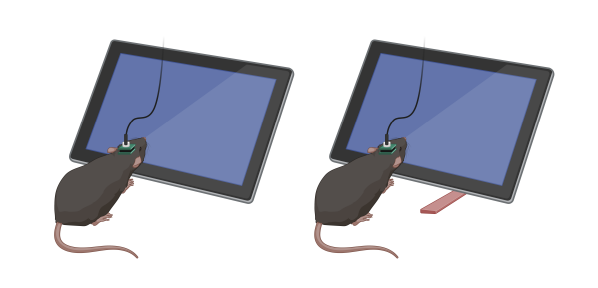

NeuroAI

neural spike decoding

- MS Thesis at med-school neuroscience lab

- Engineered statistical models

Embedded AI

AI in Robotics

- Demoed live to 10K+ technical and non-technical attendees at an exhibition

- Led AI development and deployment

About

I’m a Machine Learning Engineer with hands-on industry and research experience in applied AI (NLP, multimodal, computer vision), real-time ML systems, and statistical analysis.

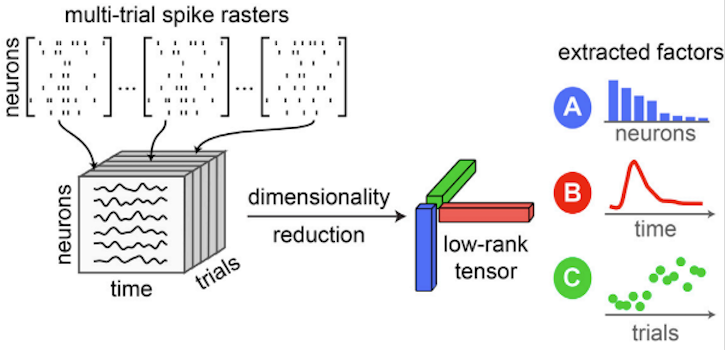

In academic research, at a neuroscience lab at NYU Grossman School of Medicine, NYU Langone Hospital, I engineered a statistical model, Tensor Component Analysis (TCA), to decode PVN neural spike trains. By reducing dimensionality and extracting latent features, we revealed condition-dependent neural plasticity.

As a technical initiative, I recently built a recommendation system that transcribes voice input, extracts intents and entities, and retrieves ranked recommendations, running on a scalable FastAPI, React.js, and Docker stack. Intent classification: 99.8% accuracy. Entity extraction: 99.97% F1. Achieved <100 ms E2E latency.

Highlighted Work

- Built end-to-end system with ASR input, intent/entity parsing, and semantic retrieval

- Achieved <100ms E2E latency (React.js + FastAPI + Docker)

- Fine-tuned DistilBERT (intent) and RoBERTa-backed spaCy NER (entity), in PyTorch; achieved 99.8% intent accuracy and 99.97% entity F1, tracked via MLflow

- Implemented cold-start personalization via Bayesian smoothing + MMR reranking
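
To make the last bullet concrete, here is a minimal sketch of how Bayesian smoothing and MMR (Maximal Marginal Relevance) reranking can fit together. It is an illustration under assumptions, not the project's actual code: the function names, the click/impression inputs, the prior strength of 20, and the trade-off weight `lam` are all hypothetical choices made for this example.

```python
import numpy as np

def bayesian_smoothed_score(clicks, impressions, prior_mean, prior_strength=20.0):
    """Shrink an item's observed click rate toward a global prior.

    With no impressions the score equals prior_mean, which gives
    cold-start items a sensible default (assumed setup, for illustration).
    """
    return (clicks + prior_strength * prior_mean) / (impressions + prior_strength)

def mmr_rerank(relevance, item_vectors, k, lam=0.7):
    """Greedily pick k items, trading relevance against redundancy.

    relevance: 1-D array of per-item scores.
    item_vectors: 2-D array of item embeddings (rows are items).
    lam: 1.0 means pure relevance, lower values favor diversity.
    """
    # Cosine similarity between all pairs of items.
    vecs = item_vectors / np.linalg.norm(item_vectors, axis=1, keepdims=True)
    sim = vecs @ vecs.T

    selected = []
    candidates = list(range(len(relevance)))
    while candidates and len(selected) < k:
        best, best_score = None, -np.inf
        for i in candidates:
            # Redundancy is the highest similarity to anything already chosen.
            redundancy = max((sim[i, j] for j in selected), default=0.0)
            score = lam * relevance[i] - (1 - lam) * redundancy
            if score > best_score:
                best, best_score = i, score
        selected.append(best)
        candidates.remove(best)
    return selected
```

In this sketch, the smoothed scores would be passed in as `relevance`, so a near-duplicate of an already selected item is pushed down the list even when its own score is high.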

Neuroscience Thesis: TCA in Maternal Learning

Statistical modeling for neural decoding & dimensionality reduction

- Investigated social learning with neuromodulation and plasticity

- Decoded neural firings from oxytocin-linked PVN circuits, supporting complex high-dimensional data analysis

- Engineered a statistical model, Tensor Component Analysis (TCA), and developed time-series analysis pipelines for dimensionality reduction and feature extraction, implementing data processing methods including z-score normalization and Peri-Stimulus Time Histograms (PSTHs) in Python and MATLAB

- Identified increased neural synchrony, more stable assemblies, and enhanced temporal coding during active engagement, contributing to insights on oxytocin's impact on PVN plasticity
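
As an illustration of the preprocessing named above, here is a minimal NumPy sketch of a PSTH and a baseline z-score. It is a sketch under assumptions rather than the thesis pipeline: the function names, the window, the bin size, and the choice to z-score against pre-event bins are hypothetical.

```python
import numpy as np

def psth(spike_times, event_times, window=(-1.0, 2.0), bin_size=0.05):
    """Return trial-by-bin firing rates (Hz) aligned to each event.

    spike_times and event_times are in seconds; window is relative to
    each event. Returns (rates, edges) with rates.shape == (trials, bins).
    """
    n_bins = int(round((window[1] - window[0]) / bin_size))
    edges = window[0] + bin_size * np.arange(n_bins + 1)
    spike_times = np.asarray(spike_times, dtype=float)

    rates = np.empty((len(event_times), n_bins))
    for trial, t0 in enumerate(event_times):
        # Count spikes in each bin relative to this event's onset.
        counts, _ = np.histogram(spike_times - t0, bins=edges)
        rates[trial] = counts / bin_size
    return rates, edges

def zscore_to_baseline(rates, edges):
    """Z-score the trial-averaged rate against the pre-event bins."""
    mean_rate = rates.mean(axis=0)
    centers = (edges[:-1] + edges[1:]) / 2
    baseline = mean_rate[centers < 0]
    sd = baseline.std()
    # Guard against a flat baseline, which would divide by zero.
    return (mean_rate - baseline.mean()) / (sd if sd > 0 else 1.0)
```

Stacking the per-trial rate matrices of many neurons yields a neurons × time × trials array, which is the kind of tensor TCA decomposes.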

Skills

ML/AI/DS/Data Analytics

Frameworks

NeuroAI

MLOps

Languages

Education

Master’s in Computer Science, New York University – Tandon School of Engineering

Thesis: Tensor Component Analysis (TCA) for Neural Plasticity in Maternal Learning

A little bit about me 🌸

To me, machine learning feels like music — creative and precise.

Growing up playing the piano, I was drawn to the rhythm of repetition, fine-tuning, and the meticulous process of constructing each part—then weaving everything together into a finished performance.

Designing AI systems felt strangely familiar — something I naturally understood and enjoyed.

I’m most alive when I’m solving something new, hard, or unseen.

Outside of work (when I find a little time), I love traveling, dancing, and playing the piano.

Travel especially shaped me — meeting people from vastly different walks of life taught me more than any textbook ever could. Those memories are my invisible treasures.

Thank you for being here. I hope something in my life or work speaks to you. 🥰